Enterprise Reporting & Business Insights Platform

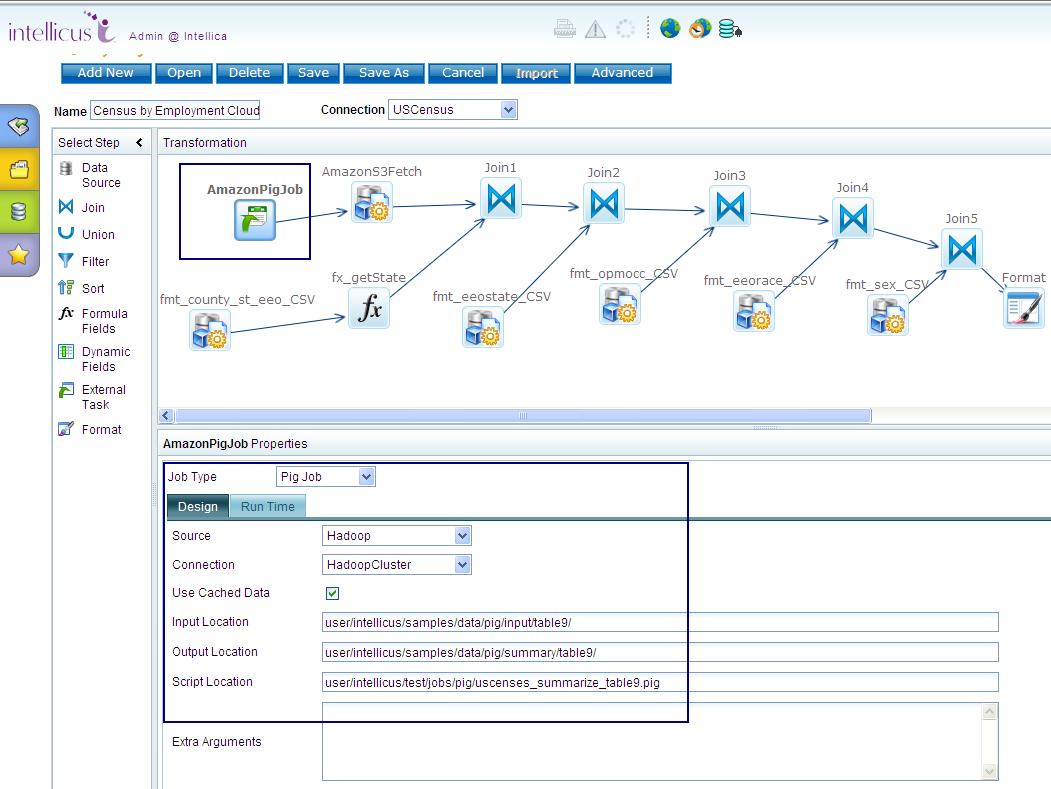

Intellicus now supports invoking AWS or Hive jobs that perform backend actions to prepare data for further analysis. To use this, choose External Task as a design step in the Query Object design.

As shown in the figure above, the external step "AmazonPigJob", which is a Pig job, is executed and performs its operations based on the script at "Script location". The designer can then fetch the processed data into Intellicus using the Data Source step named "AmazonS3Fetch". So if you use scripts that preprocess the data before it is analyzed, you can call those external jobs from within Intellicus.
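The ordering described above (an external step prepares the data, then a Data Source step reads the result) can be sketched in a few lines of Python. This is only an illustration of the pattern, not Intellicus code: the function names `external_task`, `data_source_fetch`, and `run_query_object` are hypothetical, local temporary files stand in for the S3 location, and a simple row filter stands in for the Pig script.

```python
import csv
import tempfile
from pathlib import Path


def external_task(raw_path: Path, out_path: Path) -> None:
    """Hypothetical stand-in for the external job: drop rows with blank fields."""
    with raw_path.open(newline="") as src, out_path.open("w", newline="") as dst:
        writer = csv.writer(dst)
        for row in csv.reader(src):
            if all(field.strip() for field in row):
                writer.writerow(row)


def data_source_fetch(path: Path) -> list[list[str]]:
    """Hypothetical stand-in for the Data Source step: read the processed output."""
    with path.open(newline="") as f:
        return list(csv.reader(f))


def run_query_object(raw_rows: list[list[str]]) -> list[list[str]]:
    """Run the two design steps in order: preprocess first, then fetch."""
    with tempfile.TemporaryDirectory() as tmp:
        raw = Path(tmp) / "raw.csv"
        processed = Path(tmp) / "processed.csv"
        with raw.open("w", newline="") as f:
            csv.writer(f).writerows(raw_rows)
        external_task(raw, processed)        # step 1: external job prepares the data
        return data_source_fetch(processed)  # step 2: fetch only the prepared data


if __name__ == "__main__":
    print(run_query_object([["a", "1"], ["b", ""], ["c", "3"]]))
```

The point of the sketch is that the fetch step never sees the raw input; it reads only what the external step wrote, which mirrors how "AmazonS3Fetch" reads the output of "AmazonPigJob".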

Note: You need to create a Hadoop Cluster or AmazonEC2 connection to execute external tasks on HDFS or AWS, respectively.

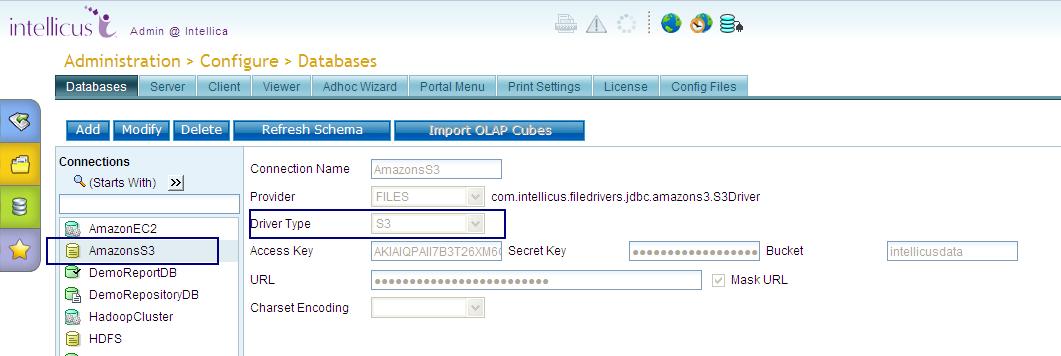

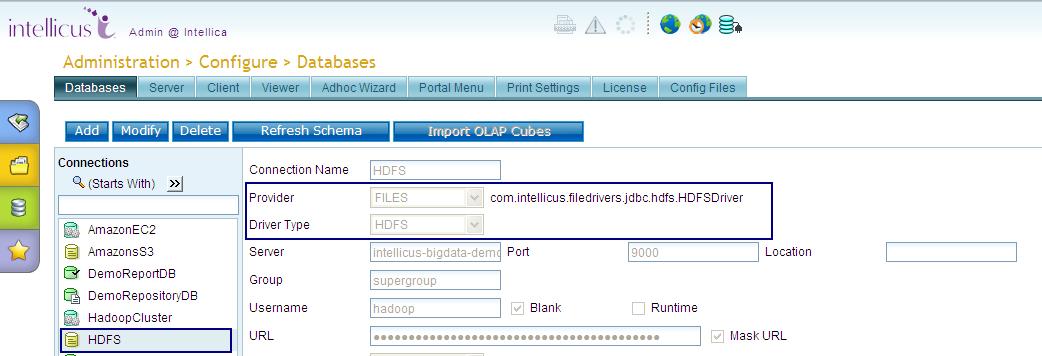

If the designer does not need Intellicus to preprocess the data and is sure that the available data is ready to be analyzed, the designer can connect directly to those file sources, whether they are Amazon S3 files, SEQ files, or Hadoop HDFS files.

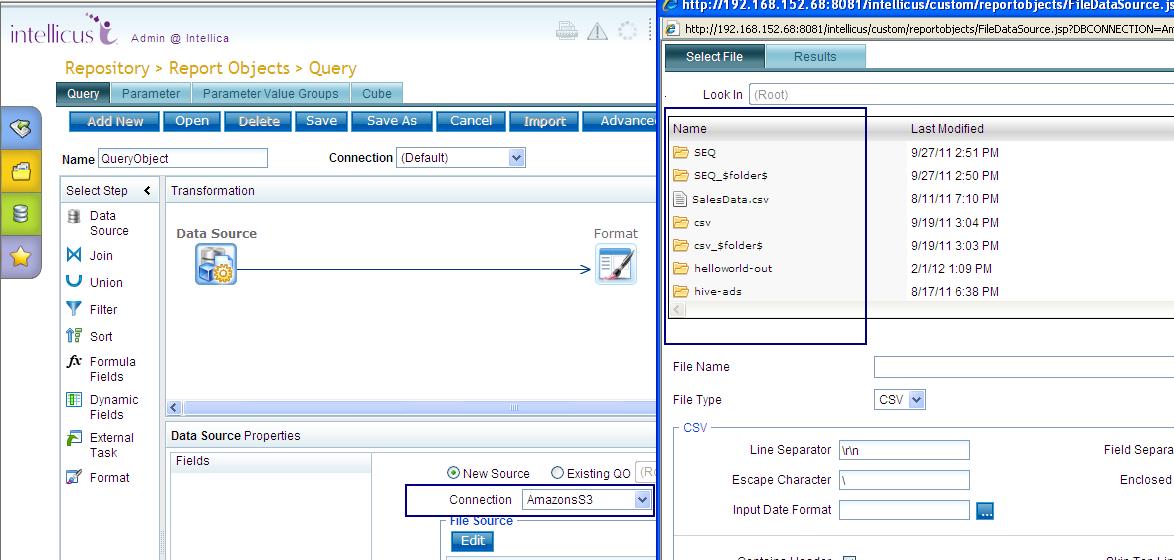

When you start creating a Query Object using one of these connections, you get a file/folder listing as shown below, from which you can choose the file to use for analysis and further actions.

Intellicus supports connecting to HBase via Hive. The administrator needs to create a connection using Hive version 0.9.0; the rest is handled by the Hive settings.
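For context on what the Hive side of such a setup can look like: Hive itself exposes an HBase table through its HBase storage handler, declared in a `CREATE EXTERNAL TABLE` statement. The sketch below builds such a statement as a string. It is an assumption that your deployment maps tables this way; the source does not describe the Hive configuration, and the helper `hbase_table_ddl` plus the table and column names are hypothetical.

```python
def hbase_table_ddl(hive_table, hbase_table, key_column, column_map):
    """Build a Hive DDL statement that maps an existing HBase table.

    column_map: Hive column name -> (Hive type, "family:qualifier").
    All names passed in are illustrative; nothing here is Intellicus-specific.
    """
    # The HBase row key is exposed as the first Hive column.
    cols = [f"{key_column} STRING"]
    cols += [f"{name} {hive_type}" for name, (hive_type, _) in column_map.items()]
    # ":key" maps the row key; the remaining entries map in column order.
    mapping = ",".join([":key"] + [hbase_col for _, hbase_col in column_map.values()])
    return (
        f"CREATE EXTERNAL TABLE {hive_table} ({', '.join(cols)})\n"
        "STORED BY 'org.apache.hadoop.hive.hbase.HBaseStorageHandler'\n"
        f"WITH SERDEPROPERTIES ('hbase.columns.mapping' = '{mapping}')\n"
        f"TBLPROPERTIES ('hbase.table.name' = '{hbase_table}')"
    )


if __name__ == "__main__":
    print(hbase_table_ddl("sales_hive", "sales", "row_id",
                          {"amount": ("DOUBLE", "cf:amount")}))
```

Once a table is declared this way in Hive, a Hive connection can query it like any other Hive table, which is consistent with the statement above that the remaining work is done by the Hive settings.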